Projects

The overarching theme of my research is designing and evaluating accessible interactions with emerging technologies such as wearables and AI-infused applications. Most of my work has focused on technologies that benefit people with visual impairments, such as wearables that provide directional feedback and speech and image recognition systems, which, like any automated system, are error-prone. To better contextualize my findings and to contribute to inclusive interactions that can benefit a larger population, I often include sighted participants in my studies.

Teachable Interface for Personalizing an Object Recognizer

The projects in this line of research aim to improve the user interface of machine teaching for non-experts. Through a user study, I examined end-users’ perception of machine teaching with quantitative and qualitative analyses to understand how non-experts train a machine learning model through a teachable interface. As the machine teaching application for this study, I built a teachable object recognizer using an Inception-v3 model and transfer learning. In addition, to facilitate machine teaching for end-users, I led a project to create a user interface that generates descriptors of a training set, so that blind users can understand the training set from those descriptors when they fine-tune an object recognizer with their own photos. The descriptors are generated using computer vision techniques such as hand detection, image quality assessment, and SIFT feature extraction and matching.
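As a rough illustration of what an image quality check on a training photo could look like, the sketch below scores sharpness with the variance of a Laplacian filter, a common blur heuristic. This is an assumption for illustration only: the project description does not say which quality measures were used, and the function names and the threshold value here are hypothetical.

```python
from statistics import pvariance


def laplacian_variance(gray):
    """Return the variance of the 4-neighbour Laplacian over interior pixels.

    `gray` is a list of equal-length rows of grayscale intensities.
    A low value means few sharp edges, which may indicate a blurry photo.
    """
    height, width = len(gray), len(gray[0])
    if height < 3 or width < 3:
        raise ValueError("image must be at least 3x3 pixels")
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Discrete Laplacian: sum of the four neighbours minus 4x the centre.
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    return pvariance(responses)


def is_probably_blurry(gray, threshold=100.0):
    """Flag a photo as blurry when its Laplacian variance is below `threshold`.

    The default threshold is a hypothetical value; it would need tuning
    on real photos.
    """
    return laplacian_variance(gray) < threshold
```

A per-photo flag like this could feed a set-level descriptor, for example the number of photos in the training set that appear blurry.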

Publications

Blind Users Accessing Their Training Images in Teachable Object Recognizers

Jonggi Hong, Jaina Gandhi, Ernest Essuah Mensah, Farnaz Zeraati, Ebrima Jarjue, Kyungjun Lee, Hernisa Kacorri. ASSETS 2022.

Crowdsourcing the Perception of Machine Teaching

Jonggi Hong, Kyungjun Lee, June Xu, Hernisa Kacorri. CHI 2020.

Revisiting Blind Photography in the Context of Teachable Object Recognizers

Kyungjun Lee, Jonggi Hong, Ebrima Jarjue, Simone Pimento, Hernisa Kacorri. ASSETS 2019.

Exploring Machine Teaching for Object Recognition with the Crowd

Jonggi Hong, Kyungjun Lee, June Xu, Hernisa Kacorri. CHI EA 2019.

Accessible Human-Error Interactions in AI Applications for the Blind

Jonggi Hong. UbiComp DC 2018.

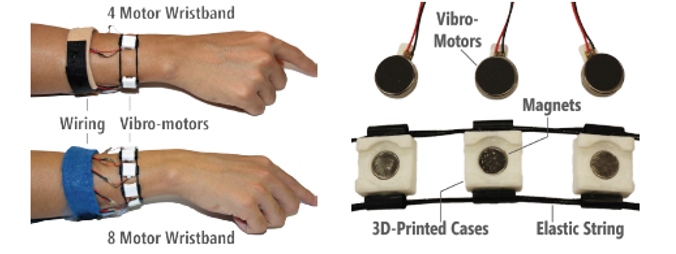

Identifying Speech Recognition Errors with Audio-only Interactions

I explored the challenges that people with visual impairments face in identifying automatic speech recognition errors. Because the text-to-speech output of a misrecognized text can sound similar to the original input, blind users may miss errors when they review speech recognition results with text-to-speech. I therefore explored the experience of reviewing and identifying errors through qualitative analysis (semi-structured interviews and thematic coding) and evaluated users’ ability to identify errors with text-to-speech through quantitative analysis. In addition, as part of my accessibility research, I designed and built a prototype of a haptic wristband that indicates direction with vibrations around the wrist. Through a user study with statistical analysis, we established a haptic wristband design that allows a blind user to trace a path or find a target on a surface based on directional guidance.
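To make the idea of directional guidance through vibrations around the wrist concrete, here is a minimal sketch that picks which vibration motor to activate for a given direction of movement. The number of motors, their even spacing, the indexing convention, and the function names are all assumptions for illustration; they are not taken from the prototype.

```python
import math


def motor_for_direction(dx, dy, num_motors=4):
    """Return the index of the motor closest to the direction (dx, dy).

    Assumes `num_motors` motors evenly spaced around the wrist, with
    motor 0 aligned to the +x direction and indices increasing
    counter-clockwise. Both conventions are hypothetical.
    """
    if dx == 0 and dy == 0:
        raise ValueError("no direction: the hand is already at the target")
    angle = math.atan2(dy, dx) % (2 * math.pi)  # normalise to [0, 2*pi)
    sector = 2 * math.pi / num_motors
    # Shift by half a sector so each motor covers the range centred on it.
    return int((angle + sector / 2) // sector) % num_motors


def motor_toward_target(hand, target, num_motors=4):
    """Return the motor that points from the hand position to the target."""
    return motor_for_direction(target[0] - hand[0], target[1] - hand[1], num_motors)
```

For path tracing, the same mapping could be applied repeatedly with the next point on the path as the target.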

Publications

Reviewing Speech Input with Audio: Differences between Blind and Sighted Users

Jonggi Hong, Christine Vaing, Hernisa Kacorri, Leah Findlater. TACCESS 2020.

Identifying Speech Input Errors Through Audio-Only Interaction

Jonggi Hong, Leah Findlater. CHI 2018.

Correcting Errors in Speech Input During Non-visual Use

Jonggi Hong, Leah Findlater. CHI Workshop 2017.

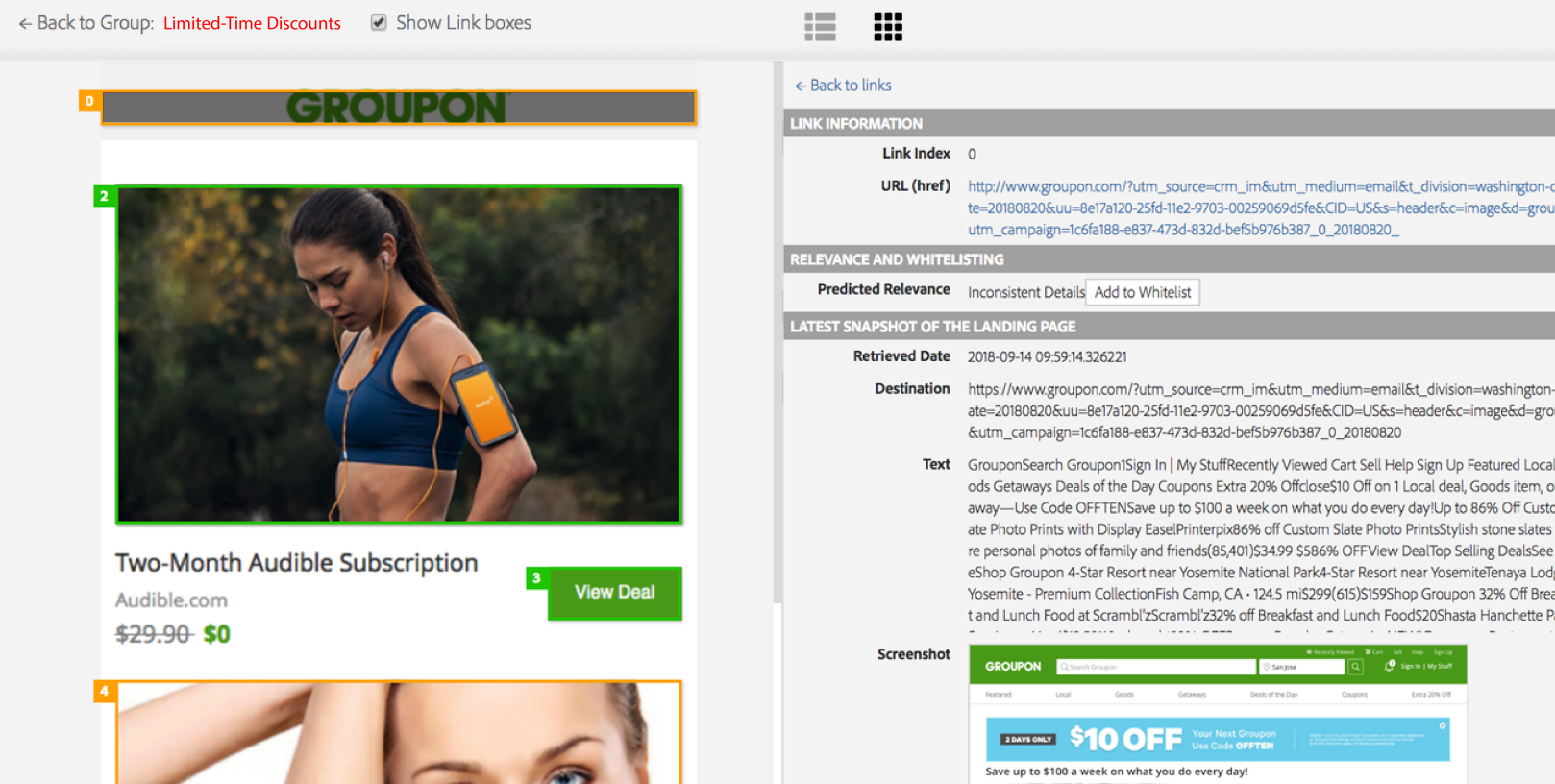

Detecting Misalignments Between Promotion Links and Landing Pages

At Adobe Research, I developed a machine learning model that classifies the semantic relationship between a link and its landing page. Beyond detecting a “Page not found” error from the server, it analyzes this relationship to detect landing pages with semantic problems such as outdated information, irrelevant information, or unexpected additional steps. In this project, I collected and cleaned the data for machine learning, then built and evaluated classifiers using an SVM, a random forest, and a neural network. This project is part of research to build a system that helps online marketers manage their emails with links to external web pages. The system is expected to help them identify misaligned links and quickly resolve problems in the links or landing pages.

Publication

Identifying and Presenting Misalignments between Digital Messages and External Digital Content

Tak Yeon Lee, Jonggi Hong, Eunyee Koh. US Patent App. 16/419,676.

Interacting with Wearable Devices

Wearable and mobile devices can enable novel interactions for people with disabilities. They can also enhance the interfaces of augmented reality applications and smartwatches.

Publications

Evaluating Wrist-Based Haptic Feedback for Non-Visual Target Finding and Path Tracing on a 2D Surface

Jonggi Hong, Alisha Pradhan, Jon E. Froehlich, Leah Findlater. ASSETS 2017.

The Cost of Turning Heads: A Comparison of a Head-Worn Display to a Smartphone for Supporting Persons With Aphasia in Conversation

Kristin Williams, Karyn Moffatt, Jonggi Hong, Yasmeen Faroqi-Shah, Leah Findlater. ASSETS 2016.

Evaluating Angular Accuracy of Wrist-based Haptic Directional Guidance for Hand Movement

Jonggi Hong, Lee Stearns, Tony Cheng, Jon E. Froehlich, David Ross, Leah Findlater. GI 2016.

Comparison of Three QWERTY Keyboards for a Smartwatch

Jonggi Hong, Seongkook Heo, Poika Isokoski, Geehyuk Lee. IWC 2016.

TouchRoller: A Touch-sensitive Cylindrical Input Device for GUI Manipulation of Interactive TVs

Jonggi Hong, Hwan Kim, Woohun Lee, Geehyuk Lee. IWC 2015.

SplitBoard: A Simple Split Soft Keyboard for Wristwatch-sized Touch Screens

Jonggi Hong, Seongkook Heo, Poika Isokoski, Geehyuk Lee. CHI 2015.

Smart Wristband: Touch-and-motion–tracking Wearable Input Device for Smart Glasses

Jooyeun Ham, Jonggi Hong, Youngkyoon Jang, Seung Hwan Ko, Woontack Woo. HCII 2014.

TouchShield: A Virtual Control for Stable Grip of a Smartphone Using the Thumb

Jonggi Hong, Geehyuk Lee. CHI EA 2013.